Being honest about AI services: How much do users need to know?

As you may have seen, Caution Your Blast Ltd (CYB) is currently working with the Foreign, Commonwealth and Development Office (FCDO) on an exciting project that involves engineering solutions with large language models (LLMs).

Together, we’re building an artificial intelligence (AI) enabled service that will help British people get information quickly and easily. There’s so much to consider – watch CYB’s Head of Engineering, Ali Salaman, giving an outline of the project.

As a Content Designer at CYB, I’ve been thinking a lot about how, where and why content fits into user-centred AI design. We know an important part of this project is that people need to trust they’re getting the right information from the UK Government. As a team, we’ve been asking one question in particular – do users need to know the project uses AI?

A project recap – AI helps people help themselves

At the moment, if people need help from the FCDO about living or travelling abroad, they can send an online enquiry. An agent at a contact centre responds by email within a few hours or days with the most relevant templated answer from a database of pre-written content.

Our AI-assisted tool will step in before someone sends an enquiry and try to find what they need instantly. When someone asks a question (or questions), the tool interprets what they’re looking for, matches their question to the most relevant template and returns it straight away.

Although the tool uses generative AI, it’s not creating new content, so there’s no risk that it’ll invent its own answers, and much less risk of it giving people incorrect information. We’re using it to do the thinking, not the speaking, as CYB’s Associate AI Solutions Architect, David Gerouville-Farrell, explains in this video.

It’s not a search, either – the LLM isn’t trawling the internet and returning results. This tool does what the FCDO contact centre staff do, but without the wait. Ultimately, it’ll give contact centre staff space to support more vulnerable people.
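To make the ‘thinking, not the speaking’ split more concrete, here’s a minimal Python sketch of the general idea. It is not CYB’s actual implementation. It assumes the model is only asked to pick an ID from a fixed list of approved templates, and that anything outside that list is rejected. The template IDs, the placeholder text and the `answer` and `stub_classify` functions are all hypothetical, and the stub is a trivial rule standing in for the LLM call so the example runs offline.

```python
from typing import Callable, Mapping, Optional

# Hypothetical pre-approved answers, keyed by template ID (placeholder text only).
TEMPLATES: dict[str, str] = {
    "marriage-abroad": "Placeholder: approved guidance on getting married abroad.",
    "lost-passport": "Placeholder: approved guidance on a lost or stolen passport.",
}

# A classifier takes the question and the allowed IDs, and returns one ID (or None).
Classifier = Callable[[str, list[str]], Optional[str]]


def stub_classify(question: str, allowed_ids: list[str]) -> Optional[str]:
    """Hypothetical stand-in for the LLM call: a trivial rule so the sketch runs offline."""
    text = question.lower()
    if "marr" in text and "marriage-abroad" in allowed_ids:
        return "marriage-abroad"
    if "passport" in text and "lost-passport" in allowed_ids:
        return "lost-passport"
    return None


def answer(question: str, templates: Mapping[str, str],
           classify: Classifier) -> Optional[str]:
    """Return a pre-written template, never text produced by the classifier."""
    # The classifier only chooses; it is given the list of approved IDs to pick from.
    choice = classify(question, list(templates))
    # Reject anything outside the approved set (including None).
    if choice not in templates:
        return None
    # The user only ever sees approved template text.
    return templates[choice]


if __name__ == "__main__":
    print(answer("need information. marrying in Thailand", TEMPLATES, stub_classify))
```

Because the user only ever sees text from the approved set, a wrong choice can return an unhelpful template but never an invented answer. Returning `None` is one possible way to signal that the service should fall back to the existing online enquiry form.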

What do users need to know about AI?

As part of the project, we’ve talked a lot about how much we should tell people about the tech we use, and how that knowledge might affect the way they feel about and use our service. Do users need to know that the project is built using LLM tools? And what difference does it make if they do?

My first thought about revealing what’s behind the magical AI curtain was: why tell people anything? We wouldn’t normally reveal the tech behind digital services, so what makes this any different?

My desk research didn’t reveal much evidence about the best way for designers to approach the use of AI – most of what I found talked about chatbots in commercial settings, which is quite different to creating a government service. But one word that came up often was transparency. It seems clear that we should aim to be honest about what we’re doing with AI – this will give our users a sense of control and choice, and allow them to feel included in the service we’re creating.

Being honest with our users

So in normal circumstances, I am fairly sure the last thing any of us care about when we’re looking for information from the government is the tech behind the answer. But, as we worked on the service, and tested each new iteration, we recognised some possible user benefits to being more honest about how we’re using AI, including:

managing expectations about the answers people will get

allowing people to adapt how they interact with the tool

taking the opportunity to educate and build trust in AI technology

Maybe we can simply create a good user experience and be transparent about it – that way, we can also begin to address the fear and suspicion around AI.

What we found in user testing

Initially, we tested the service without any additional context about our use of AI. We found participants were mostly indifferent to how their question was answered. They saw the service either as a form that would go to a real person, who would answer several days later, or as a search that would bring up a list of results immediately. Neither set the right expectations.

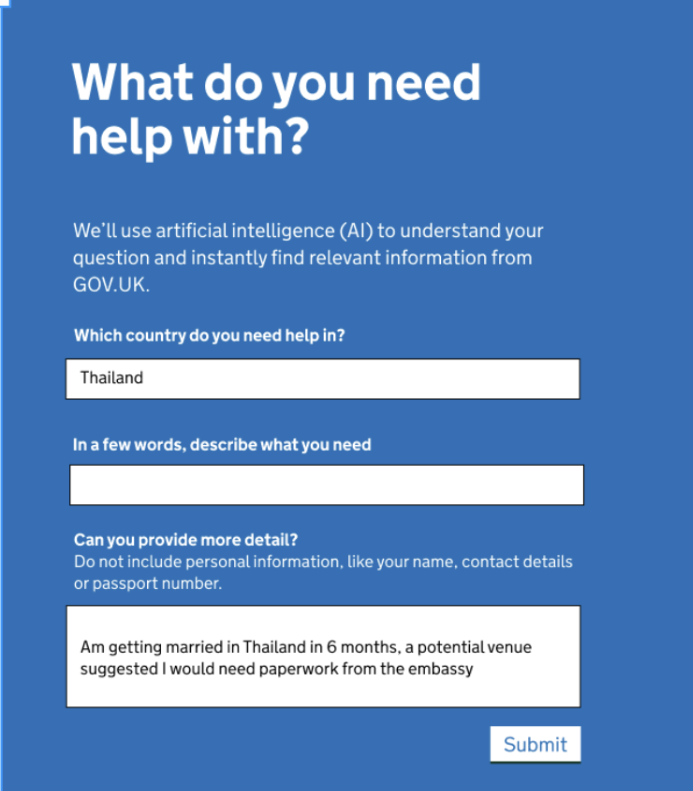

To help correct those expectations, we decided to add in some AI info. Without burdening users with a wall of text, we needed to explain:

who (or what) is answering their question

how long they’ll wait for a reply

where the information is coming from – and that it’s trustworthy

what the answer will look like

After a lot of sticky notes, editing, and trying desperately to avoid the word ‘search’, we came up with this one-liner:

‘We’ll use artificial intelligence (AI) to understand your question and instantly find relevant information from GOV.UK.’

We took this line into user testing. It’s definitely something we’ll keep iterating on, based on the way people use the tool and the feedback we get.

When we asked participants how they felt about it being an AI-assisted service, we got a mixed reception, including:

“Oh wow! Government’s using AI, how cool!”

“I don’t know much [about AI] but I know it’s taking people’s jobs away”

“It’s a bit sad but that’s the way the world’s going”

We got a few extremely positive or negative reactions – mainly based on individual experiences with AI, or what participants had chosen to read about it. But, generally, people just accepted it – a reflection of how AI is part of our lives now.

Knowing that the tool was AI-assisted definitely made a difference to the way participants used it. Most understood that keywords would affect the results and that they didn’t need to enter full sentences.

Before they knew it was AI, people entered things like:

“I am in Thailand and I have decided to get married - to a girl from Thailand - is there any specific documentation that I require in order for the marriage to be valid in the uk?”

And once they knew it was AI:

"need information. marrying in Thailand"

It was interesting (and heartwarming) that some still added ‘please’ and ‘thank you’ when talking to the tool! Watch CYB’s User Researcher, Truly, talking more about our testing.

People really just want answers

The strongest finding from testing was that, AI or no AI, people wanted to feel that they’d been understood and were given clear answers to their questions. So, no surprises there.

Although using the pre-approved templates reduces the risk of giving people the wrong answer, it means we’re giving generic information and links, rather than a tailored response. But our participants generally understood and accepted that the tool has limits, and were happy with their answers.

We’ll carry on improving how the tool interprets questions. We’ll make sure the answers it returns are easy to understand, helpful and accessible – the content design staples.

We’ll make a service that’s efficient and reliable, and maybe start to demystify AI a little bit and prove how useful it can be.