Five things I’ve learned from running remote unmoderated usability tests

Mike Green is an Associate User Researcher supporting Caution Your Blast with our work with the Foreign, Commonwealth, and Development Office.

How can you test your designs quickly and reliably with lots of users, but without the cost and time involved in recruiting participants to run in-depth usability testing sessions?

To gather rapid user feedback for a UK government service I have been working on, I have recently started running ‘unmoderated’ usability tests.

Sometimes referred to as ‘asynchronous’ testing, remote unmoderated testing sees the participant complete the tasks unsupervised.

Typically, a participant undertakes one or more short tasks on a particular feature of a product or service, then may be required to answer various questions. Their responses and on-screen actions are recorded for later viewing and analysis by the researcher.

A growing variety of such tools exists in the marketplace, presenting researchers with opportunities to gather rich insight from users quickly, economically and at scale. Nevertheless, tests such as these also bring methodological risks. Without any real-time facilitation, researchers are not able to interject and probe further if needed, and research shows that participants can be less engaged and behave unrealistically when unsupervised.

What kinds of tests have I run using these tools?

There is a variety of different types of unmoderated tests, and to date I have focused on the two which best meet my current research objectives:

First click test

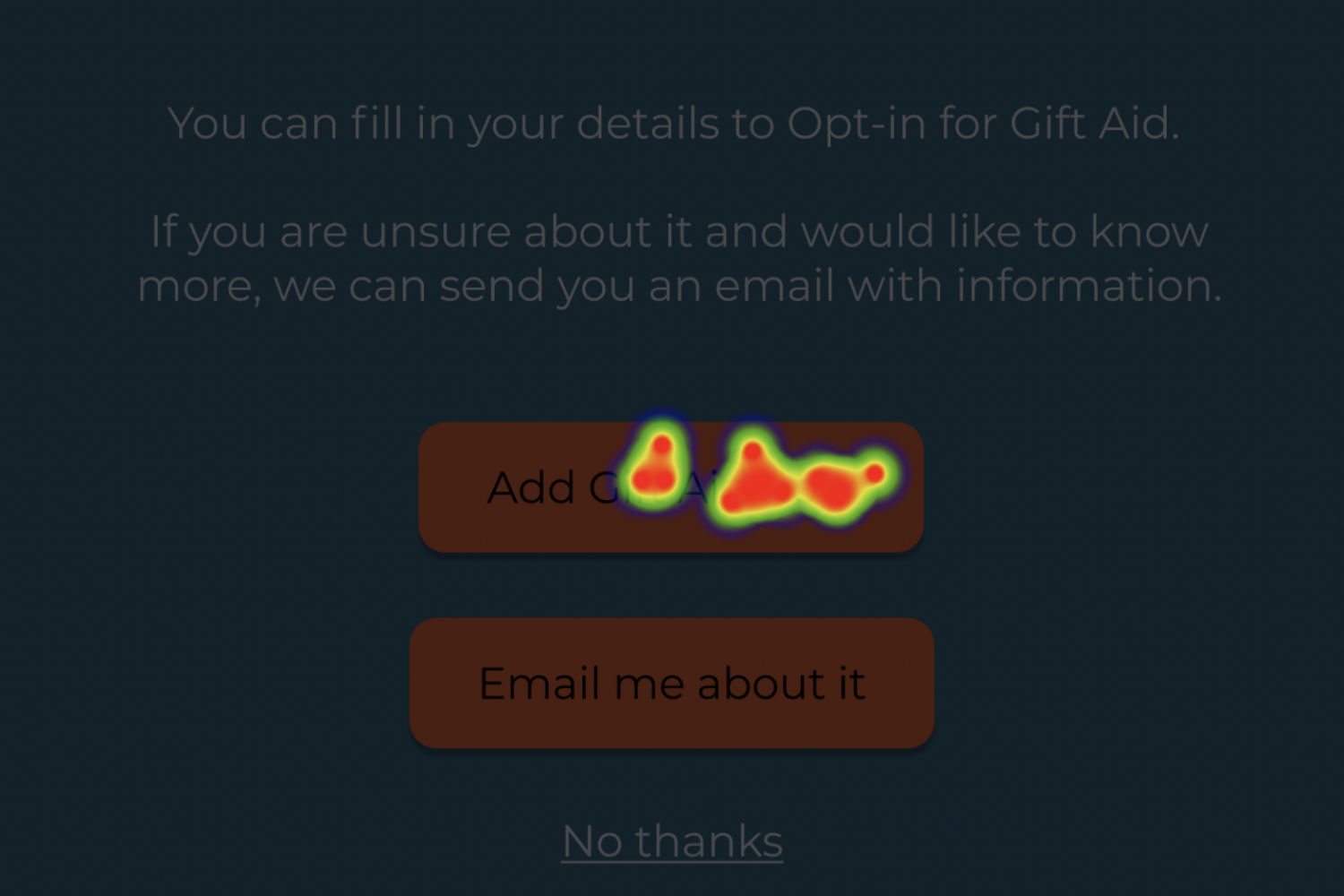

This involves showing a user an interface and asking them where they would click first to carry out a particular task.

In our case, we wanted to test how easily users found the start link to our service within a long and content-heavy ‘gov.uk’ page. Heatmaps of their clicks were generated, which showed that while a proportion of participants correctly located the link, several appeared to struggle and clicked on the wrong part of the page.

This rapid and insightful feedback led us to quickly make various design tweaks to the page and reorder the hierarchy of information within it. We were then able to carry out a second unmoderated test of the revised version the very next day with a further ten participants, and found that the error rate had been reduced.

Preference test

Participants are shown multiple versions of a design and asked to choose which they prefer. This can be used to test choices of image, colour palette, website copy, page layouts, logos, videos - in short, pretty much any aspect of your design. Preference testing allows for feedback early in the process, and produces both quantitative and qualitative feedback.

I have found this type of short, rapid test to be very effective. In one case, having shown ten participants two versions of the same page, nine of them unambiguously chose one version. The online tool we were using noted that this difference was 99.0% likely to be statistically significant.
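The tool's exact method for calculating that figure isn't stated, so the following is only a rough sanity check and not a reproduction of it. One common way to ask whether a 9-out-of-10 split could plausibly be down to chance is an exact binomial test, assuming each participant would otherwise be equally likely to pick either version. The function name `binomial_tail` is my own, purely for illustration.

```python
from math import comb


def binomial_tail(successes: int, trials: int, p: float = 0.5) -> float:
    """Probability of seeing at least `successes` out of `trials`
    if each participant independently picks the version with probability p."""
    return sum(
        comb(trials, k) * p**k * (1 - p) ** (trials - k)
        for k in range(successes, trials + 1)
    )


# Assumption: with no real preference, each version is chosen with p = 0.5.
one_sided = binomial_tail(9, 10)        # chance of 9 or 10 picking this version
two_sided = min(1.0, 2 * one_sided)     # chance of a split this lopsided either way

print(f"one-sided p = {one_sided:.4f}")  # one-sided p = 0.0107
print(f"two-sided p = {two_sided:.4f}")  # two-sided p = 0.0215
```

Under that assumption, a result this lopsided would turn up by chance only around 1-2% of the time. That is broadly in line with what the tool reported, although with only ten participants it is still worth treating as a strong hint and not as proof.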

As a follow up, we asked participants to justify their decision and a wordcloud produced from their combined responses indicated that clarity and better comprehension had guided their final choice.

What other kinds of unmoderated tests are there?

Navigation test

Users are presented with a series of screens (which can be anything from wireframes to high-fidelity page designs) to test how easily they can navigate their way through a site. The on-screen location and the timing of their clicks can be recorded. Navigation testing is useful in helping to compare an improved design to the original version.

Five second test

The user is shown a particular page or screen for only five seconds, and is then asked what they can recall. This allows you to gauge their first impression of a design, and how well that design conveys its intended message.

What lessons have I learned so far from using this kind of testing?

1. Be very clear on what you want to learn before you start

Are you testing navigation on your site? Or are you more interested in branding and the overall message conveyed? Are there specific terms or certain bits of content you want to test?

Make sure you have a clearly defined research objective for your test, or you risk wasting your time through misleading or unhelpful insights. What you want to learn will inform what kind of test you decide to run.

2. Pilot it first

Before you put your planned test live, give it to a few other people to try out, whether real users or even members of your team. Does the test make sense? Does it ‘flow’ in a logical way? Are the instructions clear? Does it meet your research objective? You may find you need to iterate it before launch.

Remember that once the test is live, you won’t be able to interject and redirect a user who starts providing answers to questions you didn’t intend to ask.

3. Consider how and who you’re recruiting

Most remote testing platforms allow you to test with your own participants, or participants from their own recruitment panel. While both options usually have a cost, the latter is significantly cheaper (typically from $1-5 per user, depending on the length and complexity of the test), and therefore can be highly beneficial in terms of cost and the relative speed of finding users to test with.

My own experience of using an online panel was that once I put a test live, I started getting responses almost instantly. Depending on the sample size I needed (usually 10-15 participants per round of research) and the length of the test, I found I usually completed a whole round of testing within minutes, which is remarkable.

To fine-tune your recruitment, you may need to filter by country, age, income level and other variables before you start. Also consider how well your participants can speak English, particularly if your tests require them to study content-rich pages.

4. Interpret your results with caution, and triangulate them with other forms of testing

With no moderator present, it can be hard to gauge actual user engagement in the task. So while this form of testing provides major benefits in terms of the speed of gathering user insights, results should be treated with a healthy degree of caution.

Combining this form of testing with other types of user research such as in-depth interviews, analytics or ethnography will provide a powerful triangulation of what you’re learning.

5. Consider running multiple rounds of tests

The beauty of this kind of testing is the speed and ease of putting tests live and getting feedback directly from users. It’s no problem to run a series of tests, either with the same designs or with different iterations, in a matter of days (or even hours).

This type of testing is a straightforward and effective way of gathering specific, targeted user insights, particularly where there is disagreement or lack of clarity about elements of a design.

Nevertheless, care does need to be taken when designing and executing this kind of research to ensure any test delivers clear, cogent and actionable user insights.